Many of you in the community have probably already seen the new release, Avatar: The Way of Water.

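Its opening-weekend global IMAX box office reached $48.8 million, the second-highest global IMAX opening of all time. First, let's take a look at some behind-the-scenes footage!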

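When James Cameron's Avatar was released in 2009, it showcased several technical and artistic breakthroughs spanning performance capture, virtual cameras and native stereo photography. Then came the leaps that visual effects studio Wētā FX (then Weta Digital) made in extracting "templates" from edited performances and turning performance capture into CG characters, along with detailed facial rigging and animation, texturing, rendering and compositing.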

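Then in 2022, with the release of Avatar: The Way of Water, Cameron's Lightstorm Entertainment and Wētā FX raised the bar for visual effects once again. The advances were not only in performance capture and characters but also in one of the film's biggest highlights: the water effects.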

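The new film pushes real-time performance capture further, with new motion capture suits and HMCs (head-mounted cameras), a robotic eyeline system, a live-action simulcam and real-time depth compositing.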

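Not to mention the underwater capture of actors in a huge tank at Manhattan Beach Studios, 120 feet long, 60 feet wide and 30 feet deep. It even allowed capture as actors moved from above the surface down into the water, and vice versa. And of course there was the use of 3D, high dynamic range and high frame rate.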

Underwater motion capture

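Wētā FX also reached new heights for The Way of Water in many different areas. The first was facial rigging and animation. There, the studio moved from blend shapes to a new muscle-strain system with a built-in neural network, which can be driven by performance capture and, of course, by animators.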

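Then there are the many different kinds of CG water in the film: ocean waves, reefs, underwater currents, bubbles, foam, characters in the water and more. For these, Wētā did extensive R&D on the fluid solvers in Loki, its in-house simulation framework. It also relied on many other tools, including Manuka, its proprietary physically based renderer.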

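Below is an overseas media interview with the Way of Water effects team: Senior Visual Effects Supervisor Joe Letteri, Wētā FX Senior Visual Effects Supervisor Eric Saindon and Wētā FX Senior Animation Supervisor Daniel Barrett. They reveal details of how the effects were made: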

From left: Joe Letteri, Eric Saindon, Dan Barrett

What new problems did you need to solve for the on-set performance capture on this film, especially with water?

Joe Letteri: Well, the capture itself was actually handled by the team at Lightstorm, rather than us–that’s where Jim did his performance capture, with that team using a big tank with a wave mover. Their approach was to build a motion capture volume and sink it into the tank, then build another volume that was above the tank, because we needed action in and out of the water. Characters bounce up and jump into the water, so the two had to work together. They’re similar in idea, but in practice they’re different.

Back on Apes, we switched from using optical light to using infrared light because it works in a lot of situations where optical light has problems. You don’t get stray reflections and things like that; however, you can’t use it underwater because the red wavelengths get absorbed within a few feet, so that doesn’t work.
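
To put rough numbers on "absorbed within a few feet", here is a minimal Beer-Lambert sketch. The absorption coefficients are approximate, illustrative values for clear water that we are assuming here. They are not figures from Lightstorm or Wētā FX. The takeaway is the ordering: near-infrared dies out within about half a meter, while blue light travels much farther. That is consistent with moving to a bluer light for underwater tracking.

```python
import math

# ASSUMPTION: rough, illustrative absorption coefficients for clear water (1/m).
# Real values depend on the exact wavelength and on what is dissolved in the water.
ABSORPTION_PER_M = {
    "blue_450nm": 0.01,
    "red_650nm": 0.35,
    "near_ir_850nm": 4.3,
}


def transmitted_fraction(coeff_per_m: float, distance_m: float) -> float:
    """Beer-Lambert law: fraction of light remaining after distance_m of water."""
    return math.exp(-coeff_per_m * distance_m)


def distance_to_fraction(coeff_per_m: float, fraction: float) -> float:
    """Distance at which only `fraction` of the original light remains."""
    return -math.log(fraction) / coeff_per_m


if __name__ == "__main__":
    for band, a in ABSORPTION_PER_M.items():
        d10 = distance_to_fraction(a, 0.10)
        print(f"{band}: 10% of the light left after {d10:.2f} m")
```

Absorption alone is only part of the story. Scattering from particulate in a real tank shortens usable range further, and this sketch ignores it.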

White balls in the water mark the boundary between the capture volumes above and below the surface

It was Ryan Champney, part of the Giant team at Lightstorm, who came up with a super blue, almost ultraviolet, light that was used for that. It’s super blue underwater and infrared above the water. We then put the two volumes together and realised we could actually get decent data by tracking it that way. That was the big win, because at some point we thought, ‘Well, this could turn out to just be really good reference.’ But in fact it turned out to be pretty usable data, right Dan? We were able to use a lot of it pretty cleanly.

Dan Barrett: We were, yeah. Obviously, it was fantastic to have buy-in from the cast. First of all, they learned how to stay underwater comfortably–and they could stay underwater for a long time. Then once they were comfortable, they gave us all these great performances down there, which was pretty amazing.

Joe Letteri: So, we’d have actors swimming underwater. Now, obviously humans can’t go as fast as the actual Metkayina who are adapted for underwater, but you still get correct swimming motion and buoyancy. And you get a performance that is only possible when you’re submerged in water, as opposed to trying to do it dry for wet.

Then, the animation team would take over and say, ‘Okay, well, I see the swim, but this needs to be a power stroke that’s propelled by a tail.’ So the team would add those extra motions and extend it, building a proper character performance from that basis.

Eric, in terms of body and facial motion, what new technology was used on set?

Eric Saindon: As far as the on-set facial capture goes, we didn’t do a lot different other than shifting to two cameras to get better capture information. It gives you a lot more shape in the face and better information on the motion. The detail on the lips, too, things like that.

The other big tech differences on set were things like the real-time depth compositing, which allowed us to layer things properly in camera. Previously we would’ve put either A over B, or B over A, but if Quaritch walked in front of Spider, say, and then behind Spider, we wouldn’t have been able to get that previously.

Now with the real-time depth compositing, you can actually place the characters properly in space, and get the proper composition. Because of the height difference, if Quaritch [Stephen Lang in Avatar form] was supposed to be behind Spider [Jack Champion, a human character], he’s so big that composition-wise, it would’ve been a little wacky on set. You wouldn’t have known how big he was and his proportions in relation to Spider would have been off. With our new system, Jim gets a really great idea of what the shots are going to be, how to compose them, how to shoot them while on set.
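
Here is a minimal sketch of the idea behind depth compositing. It assumes each layer arrives with a per-pixel depth map, measured as distance from camera, with infinity wherever the layer is empty. This is a generic z-test, not the on-set system, whose implementation the interview doesn't describe. Unlike a fixed A-over-B comp, the layer order is decided per pixel and per frame.

```python
from typing import List, Tuple

Pixel = Tuple[float, float, float]  # RGB


def depth_composite(
    color_a: List[List[Pixel]], depth_a: List[List[float]],
    color_b: List[List[Pixel]], depth_b: List[List[float]],
) -> List[List[Pixel]]:
    """Per-pixel depth test: keep whichever layer is nearer to the camera.

    Depths are distances from camera; use float("inf") where a layer has
    no coverage so the other layer always wins there.
    """
    out: List[List[Pixel]] = []
    for row_ca, row_da, row_cb, row_db in zip(color_a, depth_a, color_b, depth_b):
        out.append([ca if da <= db else cb
                    for ca, da, cb, db in zip(row_ca, row_da, row_cb, row_db)])
    return out
```

For example, with a live-action Spider layer and a CG Quaritch layer, a character who walks from in front of the other to behind him only changes the depth values. The compositing code itself stays the same.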

That ‘depth comp’ was used in combination with the new eyeline system, which is kind of stolen from sports games–the camera is on a wire like the ones you see running over an NFL game, say. We had a setup like that, which allowed us to put a video monitor floating in space at the right location for Quaritch’s head when Spider is interacting with him. It showed Stephen Lang’s capture performance and moved in sync with the CG character’s movements in the scene, which gave Jack Champion an eyeline for where to look, as well as timing and a performance to play off. This in turn gave us a better performance and allowed us to use our new facial system and our animation in a great way.
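
The interview only says the monitor rides on wires, like a sports cable-cam. Suppose it is a four-cable rig of that kind; that is our assumption, and so are the stage dimensions used below. Then placing the monitor at the CG character's head reduces to computing each cable's length for a target point, as in a cable-driven parallel robot:

```python
import math
from typing import List, Tuple

Vec3 = Tuple[float, float, float]


def cable_lengths(anchors: List[Vec3], target: Vec3) -> List[float]:
    """Inverse kinematics for a point-carriage cable rig (ASSUMED 4-cable setup).

    Each cable runs straight from its overhead anchor to the carriage, so the
    length each winch must pay out is simply the anchor-to-target distance.
    """
    return [math.dist(a, target) for a in anchors]


# Hypothetical stage: anchors at the corners of a 20 m x 20 m stage, 10 m up.
ANCHORS: List[Vec3] = [(0.0, 0.0, 10.0), (20.0, 0.0, 10.0),
                       (0.0, 20.0, 10.0), (20.0, 20.0, 10.0)]
```

Feeding the CG character's head position into `cable_lengths` every frame would give winch targets that keep the monitor in sync with the character. A real rig would also need cable sag, tension limits and safety bounds, none of which are modeled here.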

Spider (Jack Champion) on set

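The integration of the live-action Spider with the CG Avatars, the Na'vi and other characters: I just thought it was seamless. Can you talk more about that compositing and integration?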

Eric Saindon: Well, I think it all starts at the very beginning. So many times you shoot a character on a greenscreen and you end up shooting roughly what you think that character’s going to do. Composition-wise, you might shoot it a little bit weird, then you have to put it on a card and make it fit with the performance you want.

Here, everything was done with performance capture in almost a theatrical setting, with all of the actors together going through an entire scene without any cameras–all the actors knew the process. Then when it came to live action, Jack understood what the scene was because he’d already performed it.

It also meant that Jim knew what he was going to get. He could see the other performers acting with Jack, as CG characters with Spider together. It allowed for a much better composition and timing for the interaction between the characters. It meant we could get a great idea of what the shot was going to be, or what plate was going to work with the others. It just set us down a great path.

Wētā FX has done a lot of work on digital humans and characters with its facial system. What new approach did you take here?

Joe Letteri: For me, it came out of the system we’ve been using ever since Gollum. We were just banging our heads up against the limitations of it, so I had an idea for how to solve it, but Dan actually had to deal with it.

Dan Barrett: We’ve been using our FACS system for years. It wasn’t a muscle based system, it was basically a blend shape system based on the surface of the face. It was more about decomposed expressions and how they combined when the animator used the sliders. You can do really great emotional performances with that, but you can quite easily get off-model. You can quite easily combine things that shouldn’t be combined, then all of a sudden you can’t quite recognize your character and you wonder what’s going on, so you have to go back in and work out what’s happened. Sometimes you’d even have to rebuild it.
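
Some readers may be less familiar with blend shapes. Below is a minimal sketch of the standard linear (delta) formulation; it is a textbook model, not Wētā's FACS rig. It shows why off-model results come so easily. Deltas simply add, so nothing stops two shapes from pushing the same vertex further than a real face could move. The shape names and vertex positions are hypothetical.

```python
from typing import Dict, List, Tuple

Vec3 = Tuple[float, float, float]


def apply_blend_shapes(base: List[Vec3], targets: Dict[str, List[Vec3]],
                       weights: Dict[str, float]) -> List[Vec3]:
    """Linear (delta) blend shapes: v = base + sum_i w_i * (target_i - base)."""
    out: List[Vec3] = []
    for idx, (bx, by, bz) in enumerate(base):
        dx = dy = dz = 0.0
        for name, w in weights.items():
            tx, ty, tz = targets[name][idx]
            dx += w * (tx - bx)
            dy += w * (ty - by)
            dz += w * (tz - bz)
        out.append((bx + dx, by + dy, bz + dz))
    return out


# Hypothetical one-vertex "mouth corner" and two shapes that both pull it outward.
BASE = [(1.0, 0.0, 0.0)]
TARGETS = {"smile": [(1.5, 0.5, 0.0)], "lip_stretch": [(1.5, 0.0, 0.0)]}
```

With both sliders at 1.0, the corner moves out twice as far as either shape alone (x goes from 1.0 to 2.0). The linear model accepts that without complaint, which is the off-model problem Dan describes.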

I think in the past we’ve been good at that emotionally plausible work, but we really wanted to be much more anatomically plausible. This new system uses muscle strains rather than just surface faces. We essentially measured the muscle strains from the actor’s face by reconstructing their faces, which could then be applied to the characters.

All of these shapes, every single movement, were based on a data set that had been captured from the actors’ faces. From the stereo cameras–the face cam–we could get fantastic solves from the performers that as an animation tool remained plausible.

The way that we interacted with it, we could pull points on the face, so if you wanted someone to smile, you pulled those corners back, and it would allow that to happen, but it wouldn’t allow it to go too far. Maybe if you pushed the cheek up, it could go a bit further, because that’s a fuller smile. So it was both an incredibly powerful solving tool to get those performances from the day, while being a great tool for animators as it kept us in line.
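
The interview doesn't describe the solver's math, so the following is only a toy illustration of the behavior Dan describes. A strain the animator requests is clamped to a range derived from capture data, and a coupled control (raising the cheek) widens that range for a fuller smile. All names and numbers here are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class StrainRange:
    lo: float
    hi: float


# HYPOTHETICAL limits, standing in for ranges learned from an actor's capture data.
CORNER_PULL = StrainRange(lo=0.0, hi=0.6)
CHEEK_BONUS = 0.3  # extra lip-corner travel allowed at full cheek raise (hypothetical)


def constrained_corner_pull(requested: float, cheek_raise: float) -> float:
    """Clamp a requested lip-corner strain to its plausible range.

    cheek_raise is clamped to 0..1; raising the cheek widens the upper limit,
    mimicking the 'fuller smile' coupling described in the interview.
    """
    cheek = min(max(cheek_raise, 0.0), 1.0)
    upper = CORNER_PULL.hi + CHEEK_BONUS * cheek
    return min(max(requested, CORNER_PULL.lo), upper)
```

A production system would work on many coupled muscles at once rather than a single clamped value. The sketch only shows why the same pull can be rejected on its own and allowed once the cheek is up.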

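One huge problem Wētā FX solved for this film was water. You have normal waves, crashing waves and, of course, underwater. Characters go from above to below and from below to above. Joe, can you say something about how you started approaching the water?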

Joe Letteri: Yeah, and we also had hair and costumes in and out of the water, these things all had to work together. It’s another one of those things that we started thinking about after we did the first film, just “How do you make this better?”

There are two tricky things about water. One is we all know what it looks like, in all its forms; splashes, bubbles, foam, anything you can do with water. But each of those things is a different form – a big swimming pool of water is different to an ocean of water, and a rolling wave is different to a breaking wave, and a creature swimming through the water is different to a boat moving through the water, which is different to a creature jumping out of the water. They’re all different things.

We realized that we couldn’t really solve all that with one solver, because there are different states for each form. Bulk water will react to air drag differently to small particles because you’re changing the shape of them or you’re changing the shape and the scale. What we realized is that water exists in all these different states, so we wrote a suite of solvers in Loki, each one tuned for the optimal state.

What Loki essentially does is parse the simulation and assign the correct solver to the correct state, before allowing room to blend in between the two. The artists have control over things like blend points and where they move those solvers. This allows us to go back and say, ‘Yes, now we can solve it in one pass.’ We’re running all the solvers in parallel, each one allocated their portion of it.
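
Loki's internals aren't public, so this is a generic sketch of the pattern Joe describes, built on our own assumptions. Two solvers each produce a velocity for the same sample. A per-sample state measure decides which solver owns it; here that is a hypothetical `spray_measure`, for example one minus the local liquid fraction. Artist-set blend points define the transition band between the two.

```python
from typing import Tuple

Vec3 = Tuple[float, float, float]


def smoothstep(edge0: float, edge1: float, x: float) -> float:
    """Smooth 0..1 ramp between edge0 and edge1 (clamped outside)."""
    t = min(max((x - edge0) / (edge1 - edge0), 0.0), 1.0)
    return t * t * (3.0 - 2.0 * t)


def blended_velocity(v_bulk: Vec3, v_spray: Vec3, spray_measure: float,
                     blend_start: float = 0.3, blend_end: float = 0.7) -> Vec3:
    """Blend two solvers' results by a (hypothetical) state measure.

    Below blend_start the bulk-water solver owns the sample, above blend_end
    the spray solver does, and in between the two are mixed smoothly.
    blend_start/blend_end play the role of artist-controlled blend points.
    """
    w = smoothstep(blend_start, blend_end, spray_measure)
    return tuple((1.0 - w) * b + w * s for b, s in zip(v_bulk, v_spray))
```

A smooth ramp rather than a hard switch avoids visible seams where water changes state. Moving the blend points shifts where each solver takes over.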

That was the approach we decided to take. I don’t know how you could have done it any other way. Plus, it also had the advantage that we could break it out into multiple machines in parallel. The big solves could actually be broken out into multiple machines. We could do solves all across the water. For example, when we had a bunch of boats in the water, we could do high-res solves over and around the boats and blend it into the bigger tank. That was why we built Loki a few years ago, to get this film going.
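
The interview doesn't say how Loki distributes work, so here is a generic domain-decomposition sketch built on our own assumptions. A 1D grid stands in for the water volume. Each tile carries one "halo" cell from its neighbors, and a thread pool stands in for separate machines. Because each tile can see its neighbors' edge values, the tiled result matches the single-pass result exactly.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import List, Tuple


def diffuse_step(u: List[float], alpha: float) -> List[float]:
    """One explicit diffusion step on a 1D grid with fixed (Dirichlet) ends."""
    out = u[:]
    for i in range(1, len(u) - 1):
        out[i] = u[i] + alpha * (u[i - 1] - 2.0 * u[i] + u[i + 1])
    return out


def diffuse_step_tiled(u: List[float], alpha: float, n_tiles: int) -> List[float]:
    """Same step, split into tiles that each carry one halo cell per side,
    so every tile can be solved independently (here on a thread pool)."""
    n = len(u)
    bounds = [(k * n // n_tiles, (k + 1) * n // n_tiles) for k in range(n_tiles)]

    def solve_tile(b: Tuple[int, int]) -> List[float]:
        start, end = b
        lo, hi = max(start - 1, 0), min(end + 1, n)  # include halo cells
        local = diffuse_step(u[lo:hi], alpha)
        return local[start - lo:start - lo + (end - start)]  # drop the halos

    with ThreadPoolExecutor() as pool:
        pieces = list(pool.map(solve_tile, bounds))
    return [x for piece in pieces for x in piece]
```

A real solver exchanges halos every time step. Higher-resolution tiles around boats would also need resampling where they meet the coarser tank, which this sketch leaves out.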

Eric, regarding the underwater "look", what kinds of conversations did you have internally and with Jim?

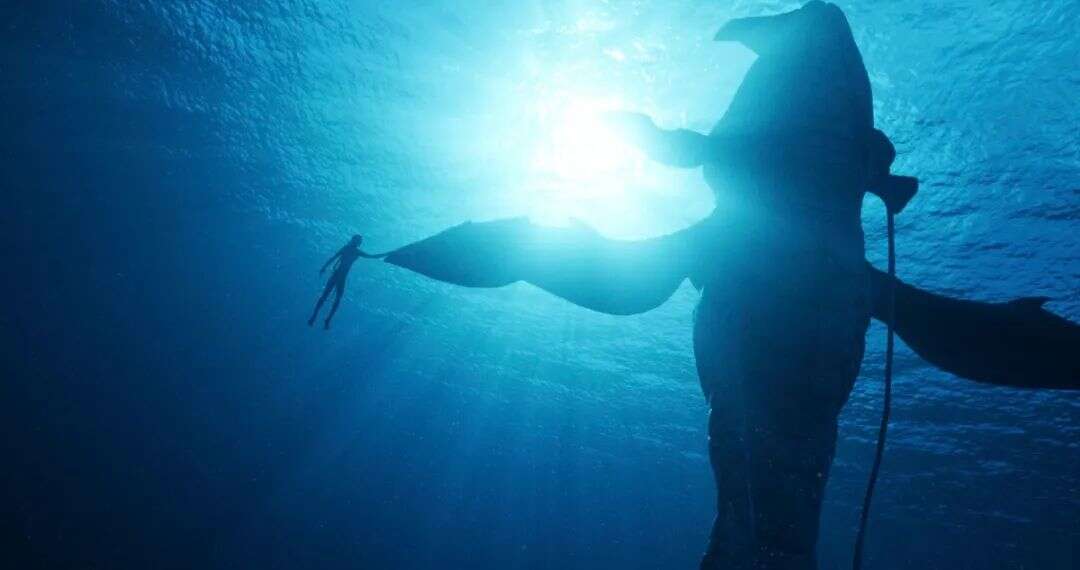

Eric Saindon: Well, Jim is a bit of an expert on water and underwater photography, especially. The way you look at the creatures underwater, you look at a Tulkun and you think ‘whale’, but a Tulkun is really five times the size of a whale. So when you get underwater you want the particulate, because you want to feel the medium, especially when you’re watching it in stereo. You want to feel that water around you, you don’t want them to look like they’re floating in air, so you have to add particulate. But, you don’t want to overdo it because then in reality you wouldn’t see more than five meters, and you want to be able to feel the characters and understand the distance.

So, we had to play with lots of different things, depending on the scene. You want to have the particulate, you want to be able to see a little bit of the reds in the underwater. They drop off so quickly that the reality is everything would be blue in every shot, because of the scale of everything. The audience expects to see a certain color, and the audience is so much smarter these days on understanding shots and understanding CG that if you fake it too much, you’ll lose them. If you go too much into the reality of, ‘Everything would be blue, you wouldn’t ever see anything,’ then it takes the audience out of the film a little, so it’s always a fine balance. Jim was really good at knowing that balance, and guiding us along for the ‘feel’ he wanted for shots.
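
The "reds drop off so quickly" problem and the balance Eric describes can be sketched with per-channel Beer-Lambert attenuation plus an artistic mix. The attenuation coefficients are illustrative assumptions for clear water. `realism` is a hypothetical grading control, not part of Wētā's pipeline.

```python
import math
from typing import Tuple

RGB = Tuple[float, float, float]

# ASSUMPTION: illustrative per-channel attenuation for clear water, in 1/m.
ATTEN_RGB = (0.35, 0.07, 0.02)


def underwater_color(surface_rgb: RGB, distance_m: float, realism: float) -> RGB:
    """Attenuate each channel over distance_m, then mix back toward the
    unattenuated color.

    realism=1.0 gives the physically attenuated (very blue) result,
    realism=0.0 ignores the water entirely; a grade sits somewhere between.
    """
    physical = tuple(c * math.exp(-a * distance_m)
                     for c, a in zip(surface_rgb, ATTEN_RGB))
    return tuple(realism * p + (1.0 - realism) * c
                 for p, c in zip(physical, surface_rgb))
```

At 10 m with `realism=1.0`, a white object keeps about 3% of its red but over 80% of its blue, which is why everything would read as blue. Lowering `realism` brings back "a little bit of the reds".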

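In terms of creatures in the water, I especially love the skimwings. I just wanted to ask you about the animation challenges of something like that, both in and on the water.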

Dan Barrett: They were fun creatures. We looked at flying fish predominantly, for their wing and ground effects and for their flight. Jim was quite clear on what he wanted their wake to look like, based on reference that we’d seen in flying fish. Water resistance was really important for us to get right.

That was probably even more of an issue with the boats. It was something that we knew we were going to have to be on top of. We knew that if you’re animating a boat, all of a sudden you’ve got a terrain that is irregular, for starters, so your boat has to travel in an irregular way. But it’s also a terrain that can very easily change; there might be a direction for a wave phase to change, and all of a sudden our work needs to be redone. So we developed systems that meant a boat could adhere to the surface. If you were driving slowly over waves, it would just stick to the waves, and if you started speeding up, then you’d start getting the kind of air that you would expect if you are going at a greater speed.
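
Dan doesn't detail the tool, but the "slow sticks, fast gets air" behavior has a simple physical basis. We can sketch it under our own simplifying assumptions: a frozen sinusoidal wave, small slopes, a boat treated as a point, and speed measured relative to the wave pattern. Over a crest the surface curves away beneath the boat. The boat stays on it only while gravity can supply the needed centripetal acceleration v²κ, where κ is the crest curvature.

```python
import math

G = 9.81  # m/s^2


def max_crest_curvature(amplitude_m: float, wavelength_m: float) -> float:
    """Peak curvature of h(x) = A*sin(2*pi*x/L) in the small-slope limit: A*k^2."""
    k = 2.0 * math.pi / wavelength_m
    return amplitude_m * k * k


def critical_speed(amplitude_m: float, wavelength_m: float) -> float:
    """Speed at which v^2 * curvature = g; above it the boat leaves the crest."""
    return math.sqrt(G / max_crest_curvature(amplitude_m, wavelength_m))


def sticks_to_surface(speed_mps: float, amplitude_m: float, wavelength_m: float) -> bool:
    """True if a point-like boat can follow the wave crest at this speed."""
    return speed_mps <= critical_speed(amplitude_m, wavelength_m)
```

For a 0.5 m amplitude, 10 m wavelength wave, the critical speed comes out at roughly 7 m/s. Below that the boat hugs the surface; above it, it starts to catch air.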

And then, water is 500 times denser than air, so if you do hit a wave with that boat and it starts flying, it’s got gravity acting upon it in a medium that’s much less dense than when it hits the water, so you need to know how that’s going to decelerate. You need to calculate buoyancy.
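
The density contrast Dan mentions is what makes buoyancy and impact deceleration so different from flight. For reference, fresh water is about 1000 kg/m³ and sea-level air about 1.225 kg/m³, a ratio closer to 800:1 than 500:1. The sketch below uses Archimedes' principle and the standard quadratic drag law. The drag coefficient and area in the usage are arbitrary placeholders.

```python
RHO_WATER = 1000.0  # kg/m^3, fresh water (seawater is about 1025)
RHO_AIR = 1.225     # kg/m^3, sea-level standard atmosphere
G = 9.81            # m/s^2


def buoyant_force(submerged_volume_m3: float, rho_fluid: float = RHO_WATER) -> float:
    """Archimedes: upward force equals the weight of the displaced fluid (N)."""
    return rho_fluid * G * submerged_volume_m3


def drag_force(speed_mps: float, area_m2: float, cd: float, rho_fluid: float) -> float:
    """Quadratic drag F = 0.5 * rho * Cd * A * v^2 (N)."""
    return 0.5 * rho_fluid * cd * area_m2 * speed_mps ** 2


if __name__ == "__main__":
    ratio = drag_force(5.0, 1.0, 0.8, RHO_WATER) / drag_force(5.0, 1.0, 0.8, RHO_AIR)
    print(f"same hull, same speed: drag in water is {ratio:.0f}x drag in air")
```

Because drag scales linearly with density, a hull that barely slows in the air decelerates hundreds of times harder the moment it meets the water.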

So even before we got into the coupling of the water and object, simulated against each other, we had to be able to block these things quickly. We had to be able to iterate fast as well, and we had to make sure that we weren’t doing what it is that animators are prone to do, which is crash spectacularly into water. Our simulations are so real-world, that if you suddenly plow a boat deep into the water, the solver is going to think that the mass is far, far greater than your boat actually is, and there’ll be water hitting the lens from a hundred meters away.
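
One way to catch the "plow a boat deep into the water" problem before it reaches the sim is a hypothetical sanity check on animated drafts. We approximate the hull as a box, compare the water it displaces at each frame with the boat's real mass, and flag frames that displace far too much. The box-hull approximation, function names and tolerance are all our own assumptions, not the team's tools.

```python
from typing import List

RHO_WATER = 1000.0  # kg/m^3


def equilibrium_draft(mass_kg: float, length_m: float, beam_m: float,
                      rho: float = RHO_WATER) -> float:
    """Draft at which a box-shaped hull displaces its own mass (Archimedes)."""
    return mass_kg / (rho * length_m * beam_m)


def displaced_mass(draft_m: float, length_m: float, beam_m: float,
                   rho: float = RHO_WATER) -> float:
    """Mass of water pushed aside by a box hull at the given draft."""
    return rho * length_m * beam_m * draft_m


def flag_deep_frames(drafts: List[float], mass_kg: float, length_m: float,
                     beam_m: float, tolerance: float = 2.0) -> List[int]:
    """Frame indices where the animated draft displaces more than
    `tolerance` times the boat's real mass (hypothetical threshold)."""
    limit = tolerance * mass_kg
    return [f for f, d in enumerate(drafts)
            if displaced_mass(d, length_m, beam_m) > limit]
```

For a hypothetical 1500 kg boat with a 5 m by 2 m footprint, the equilibrium draft is 0.15 m. An animated dip to 0.5 m would displace more than three times the boat's mass and get flagged.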

So we developed these tools knowing that that was coming. As an animation team, we were more closely entwined with the FX team than we’ve ever been on any project before. We worked essentially in a parallel workflow, where we would do our bit with our simulation on our animation tools, then they’d do their bit. There were times when perhaps you’d have a boat that’s quite near another boat, and all of a sudden you’re creating wakes, so once that’s there, it starts affecting the boats beside them. We’d be in a circular and coupled workflow, as well as a simulation paradigm.

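One of my favorite shots, or moments, is when the kids first hesitantly jump into the water to follow the other clan. It goes from above water to underwater. How was that achieved?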

Joe Letteri: The performance capture tank was key to that, because we had the reference of them jumping in the water. So you’ve got that slowdown when they hit the water, you’ve got their proper behavior when you see them under the water, including the way they were trying to reorient themselves. I think that was pretty critical.

The other thing that made it really believable was the work we did on air bubbles. Air bubbles are much harder than we thought going into it. You’ve got cavitation, as well as all the air that gets entrained in the hair and costume and gets dragged down, before being released again and recombining into these blobby shapes.

They’re very familiar shapes. Again, we know what those look like. You know who really knows what those look like? Jim. We spent a long time trying to get that feeling of the flow of the bubbles. It’s not something that our software particularly wanted to do, right out of the box, so we had to rewrite a lot of the code to understand that entrained air solution because you’re inverting it. You’re doing bubbles inside of the water, not the other way around.

It’s similar to what Dan was talking about when a boat hits the water, you’ve got the other problem where bubbles are rising. They’re floating in the water, but as soon as they hit the air, technically they’re supersonic. It’s really hard to simulate that state change, so we had to write something to handle that boundary and get the correct bubbling on the water. That also drove into the foam–what we call foam–which is, close-up, a big collection of bubbles. One of the hardest things we had to do was that little bit of lapping water that creates those bubbles along the edge of a surface.
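
Part of why bubbles are hard shows up even in the simplest rise-speed estimate. The sketch below uses textbook Stokes drag on a small rigid sphere; that choice is our assumption, not the solver the team wrote. Tiny bubbles rise slowly and predictably. The formula breaks down quickly as bubbles grow and the flow around them turns inertial, which the Reynolds-number check exposes.

```python
RHO_WATER = 1000.0  # kg/m^3
RHO_AIR = 1.225     # kg/m^3
MU_WATER = 1.0e-3   # Pa*s, roughly water at 20 C
G = 9.81            # m/s^2


def stokes_rise_speed(radius_m: float) -> float:
    """Terminal rise speed of a small rigid sphere under Stokes drag (m/s)."""
    return 2.0 / 9.0 * (RHO_WATER - RHO_AIR) * G * radius_m ** 2 / MU_WATER


def reynolds(speed_mps: float, radius_m: float) -> float:
    """Reynolds number based on bubble diameter."""
    return RHO_WATER * speed_mps * 2.0 * radius_m / MU_WATER


def stokes_is_valid(radius_m: float) -> bool:
    """Stokes drag is only trustworthy at low Reynolds number (Re < 1)."""
    return reynolds(stokes_rise_speed(radius_m), radius_m) < 1.0
```

A 0.05 mm bubble passes the check and rises at a few millimeters per second. For a 1 mm bubble, the formula predicts over 2 m/s at a Reynolds number in the thousands, far outside its range. Visible bubbles need a more complete model.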

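Well, that leads to another favorite shot, where the family regroups on a rock outcrop. They're coming out of the water and resting on this rock. They're wet, their hair is wet, and Spider is there too. Eric, I just thought this shot worked really well because, well, I can't tell whether you actually went out and shot it, maybe on some kind of rock set, or in a bay somewhere.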

Eric Saindon: The fact that you don’t know works perfectly for us. We actually had a wave pool that we shot Jack in – it was just a little rock and Jack in the wave pool. He climbed up out of the water and sat on that rock. We used Jack and a lot but not all of the water, because we needed to connect that water with the background water – the integration of the two waters together. Then there was the integration of all the rocks and the other characters.

That is a great scene, it’s obviously an important family moment in the film. The performances around that whole scene are spectacular. For the water integration and everything, there really is a little bit of everything there. Because of the way the system was built, and because we knew what Jim wanted with the cadence of the waves, we were able to connect the CG water and the live action water together to drive that sequence and really make it work.

Joe Letteri: A lot of effort went into that integration, too. You’ve got this water surface that’s continuous, from close-up to camera and farther out. Because we were shooting it in native stereo, there was no place to hide that. Remarkably, our depth compositing system helped us there – it did a pretty good job of extracting the water surface. It had no right to work that well on that surface, but it did! And that really helped us get that integration.

Eric Saindon: Yeah, we were able to create geometry from the water surface itself, which allowed us to then use that to help drive the FX side, because we knew where the blend needed to be and how to make them work together.
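
Turning per-pixel depth into geometry is, at its simplest, pinhole back-projection. This is a minimal sketch assuming a pinhole camera with known intrinsics (`fx`, `fy`, `cx`, `cy`) and z-depth along the optical axis for each pixel. The actual pipeline isn't described in the interview, and the parameter values in the usage are hypothetical.

```python
from typing import List, Optional, Tuple

Vec3 = Tuple[float, float, float]


def backproject(depth: List[List[Optional[float]]],
                fx: float, fy: float, cx: float, cy: float) -> List[Vec3]:
    """Pinhole back-projection: pixel (u, v) with z-depth z -> camera-space point.

    Pixels with no depth estimate (None) are skipped.
    """
    points: List[Vec3] = []
    for v, row in enumerate(depth):
        for u, z in enumerate(row):
            if z is None:
                continue
            x = (u - cx) * z / fx
            y = (v - cy) * z / fy
            points.append((x, y, z))
    return points
```

The resulting point cloud could then be fitted with a mesh or heightfield for the water surface. That surface gives the FX side a concrete place to put the blend between live-action and CG water.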

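Finally, after people saw the shot of a hand pulling a strap tight on the water creature, there was a lot of speculation online about whether it was practical or CG. To settle the debate, can you tell me whether it was real or CG?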

Eric Saindon: The shot in question was both live action and CG. The props department built an Ilu saddle and strap for Kevin Dorman to sit on in a small pool on stage. Kevin’s hand and forearm were painted by Sarah Rubano using reference of Jake’s arm from the CG model. Jim Cameron was then able to get the performance he wanted for the wrapping of the strap around Jake’s hand and interacting with the water. Once we got the plates at Wētā FX, we match moved the motion and used CG from the straps above Jake’s wrists. We used real water over the saddle and around the hands and fingers. CG water was used to extend the plate and to get the interaction of Jake’s body in the water.

Disclaimer: this resource was uploaded by the publisher 【WeChat Editor】.

All content and statements posted by users on CG模型网 (cgmodel.com) represent only their own views and do not reflect the opinions or views of CG模型网 (cgmodel.com).